Working with Remote Data Sources

TopBraid EDG stores all asset collections in its own internal database, which is based on the open-source Jena TDB project. This database is optimized for query speed as it sits close to the query algorithms that drive TopBraid. However, there are scenarios where it is desirable to use external “remote” data bases such as triple stores from commercial vendors.

External triple stores may offer better scalability, i.e. going into billions of triples

Some data may be controlled or also used by other applications than TopBraid

These use cases often involve external knowledge graphs that are edited outside of TopBraid, such as Wikidata, SNOMED, UniProt or other biomedical databases.

Starting with version 7.7, TopBraid EDG offers support for so-called Remote Data Sources. Initial support includes arbitrary data sources that offer an endpoint following the standard SPARQL 1.1 HTTP protocol. Using this feature, TopBraid can seamlessly interact with data stored on external SPARQL databases and treat them as “virtual” asset collections.

Example: Geo Taxonomy uses Wikidata

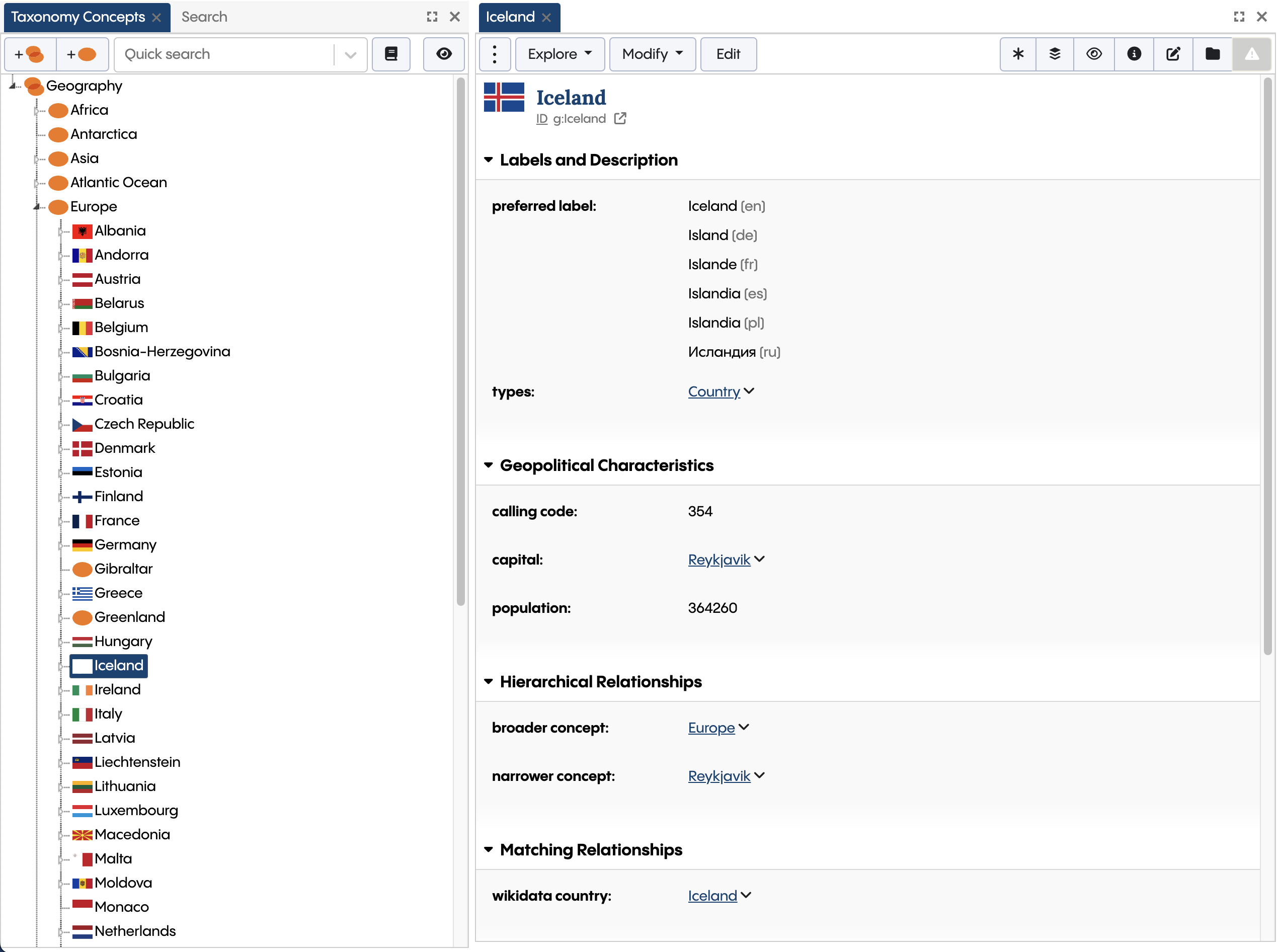

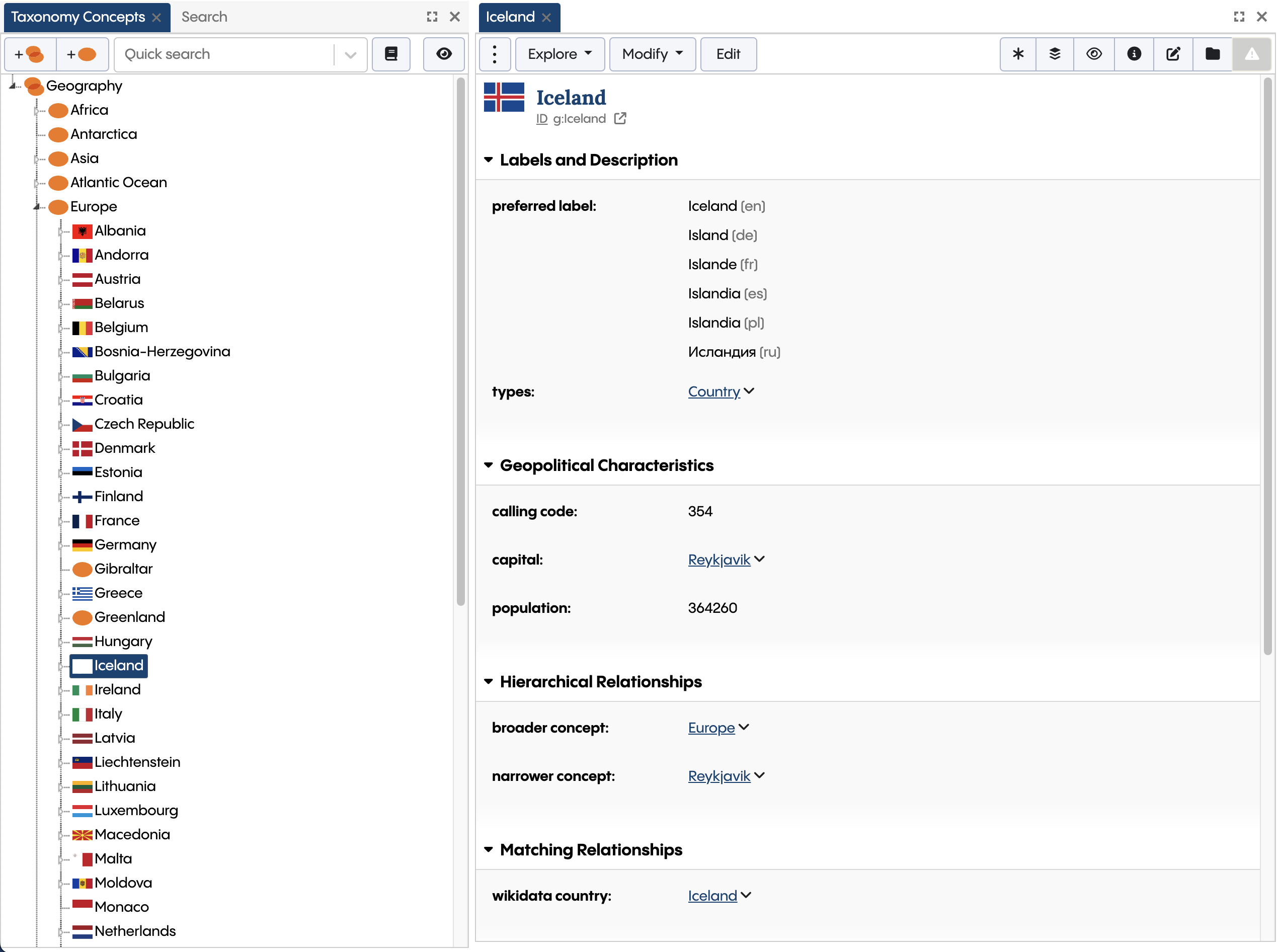

When you have installed the latest version of the Geography Taxonomy from the TopBraid EDG samples, you can see that the Geo Taxonomy has a link from its Iceland asset to a corresponding Iceland entity on Wikidata using the property wikidata country:

The country Iceland from the Geo Taxonomy points at the corresponding Iceland entity on Wikidata

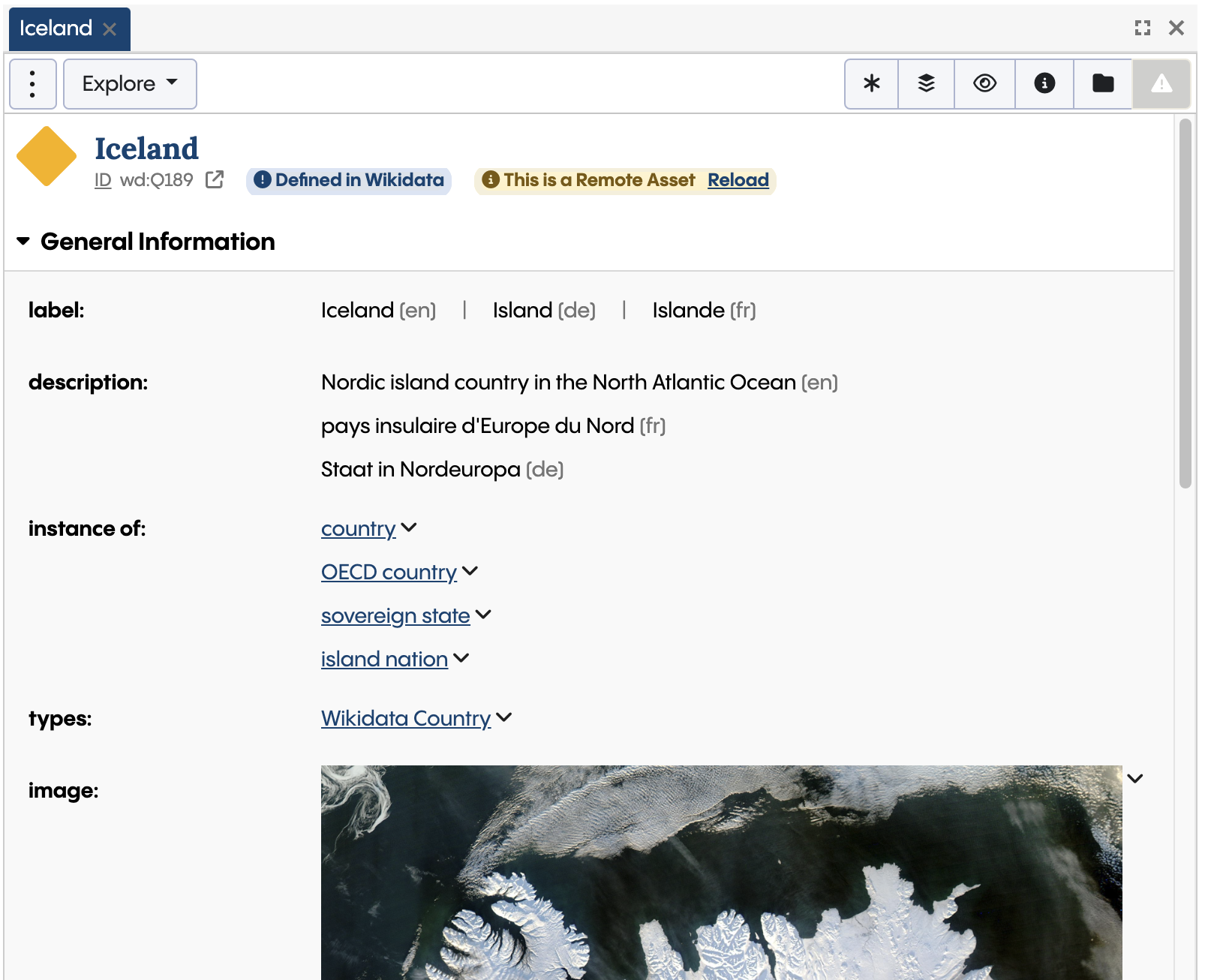

Now, you click on that link, the system will show the form for the remote asset which is, in fact, stored remotely on the public Wikidata endpoint:

The properties of the Wikidata country Iceland

Attention

Assets that are stored on remote data sources such as Wikidata will be loaded into TopBraid’s internal database when needed and then act like any other locally stored asset.

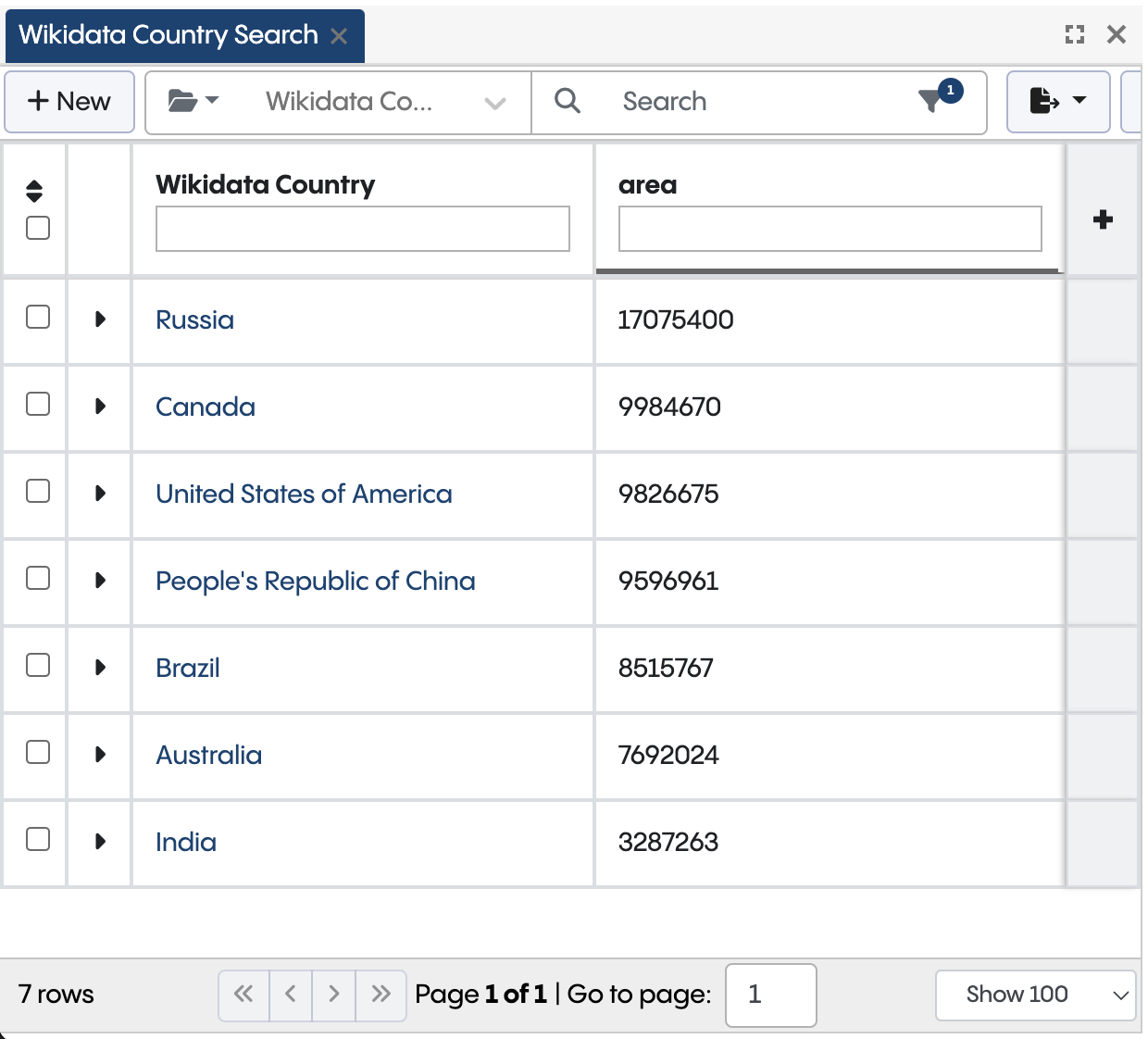

Example: Searching Remote Assets

When you navigate to the Wikidata Data Graph from the TopBraid EDG samples, you can search across various classes such as Wikidata Country using the search panel. TopBraid understands that in order to retrieve matching countries, it needs to issue a SPARQL query against the remote endpoint.

When configured for remote assets, the Search form queries the SPARQL endpoint directly

This capability means that you can now access data sources that are traditionally too large to handle efficiently from TopBraid.

Architecture of Remote Data Sources

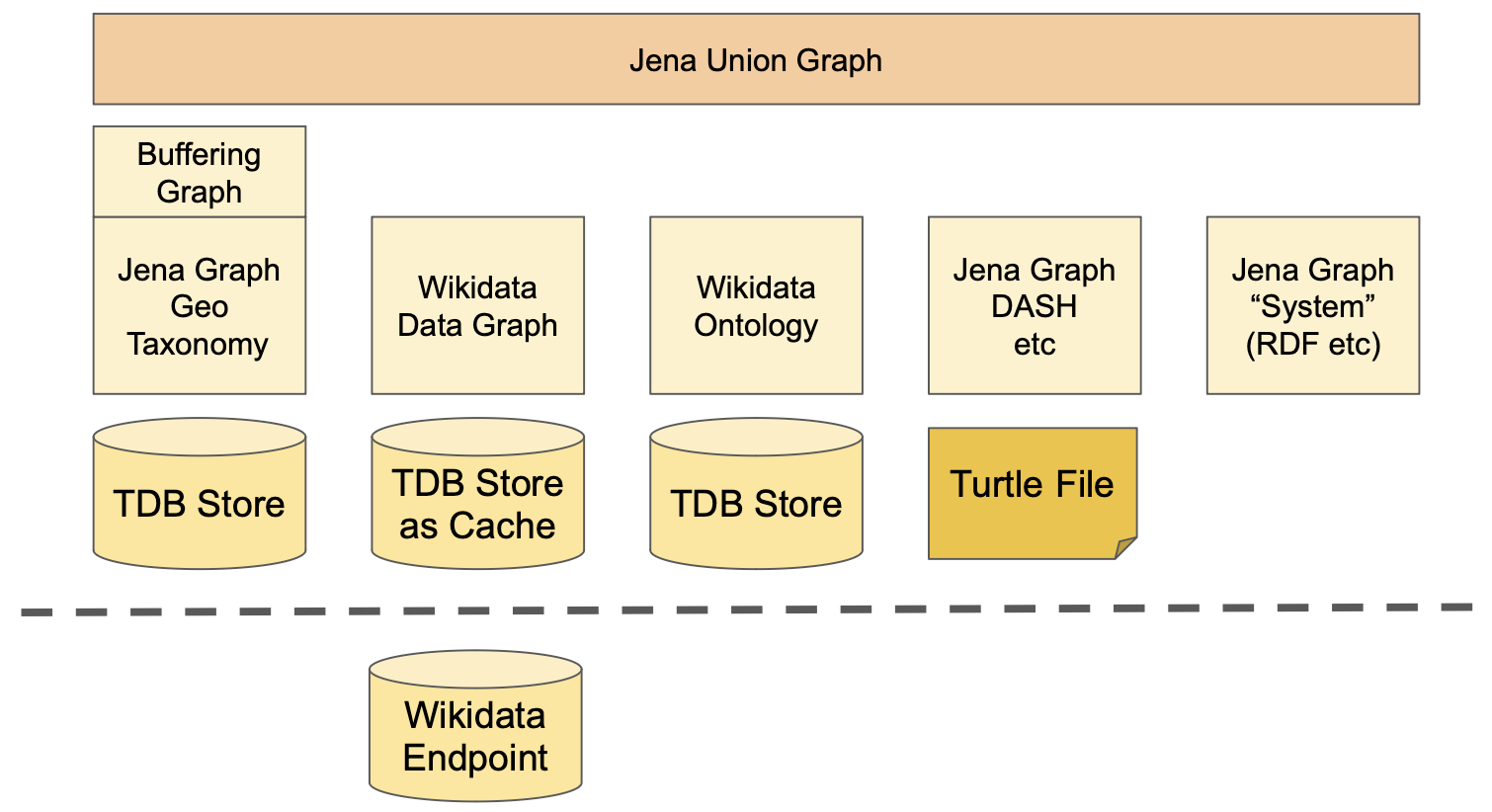

Before we go into the set up processes for remote data sources, it is helpful to understand a bit about TopBraid’s graph architecture. The figure below illustrates the graphs involved in such a scenario. In this example the asset collection “Geo Taxonomy” has been opened by the user. The Geo Taxonomy includes (owl:imports) a Data Graph called “Wikidata” which in turn includes an Ontology called “Wikidata Ontology”.

TopBraid EDG Architecture with Wikidata as a Remote Data Source

The Wikidata Data Graph uses the Wikidata SPARQL endpoint to provide a virtual view on basically any data stored in Wikidata. While all asset collections use the local TDB database, the Wikidata asset collection uses its TDB only as a “cache” of sorts, which is only populated with the subset of Wikidata that is actually relevant. For example, once a user visits a Wikidata asset, TopBraid will

Fetch the label and type(s) of that asset

Based on the type information, load all properties that are defined for the classes

Whenever an asset is loaded, TopBraid remembers the time stamp alongside the other RDF triples. TopBraid uses that time stamp to determine whether an asset is already loaded or not.

Note

TopBraid’s remote asset support means that you can connect your own assets to data stored in external databases without having to copy the whole external database into TopBraid’s local database. TopBraid will only ever “see” the parts that are relevant to the local use case.

The following figure illustrates how this works:

TopBraid's remote storage mechanism uses SHACL shapes to determine which subset of values to load into the cache

The class and shape definitions are already used by TopBraid’s Form, Search and Asset Hierarchy panels, where they act like filters or views on the underlying data. The remote data support relies on exactly the same classes and shapes. So for example, when you browse to a country in Wikidata, it would only download exactly the properties defined for the Country class in the associated ontology, while the bulk of other properties remain on the remote server only. Likewise you can only query instances of classes that are known to be present on the remote server.

Configuring Remote Asset collections

If you want to connect to a SPARQL endpoint, create an initially empty asset collection for it. In many cases you will find that Data Graphs are the most flexible way of working with remote data, but if your remote data is in SKOS, you may also select a Taxonomy.

Note

It is not possible to use Ontologies as remote data. All Ontologies need to be traditional, local asset collections in EDG.

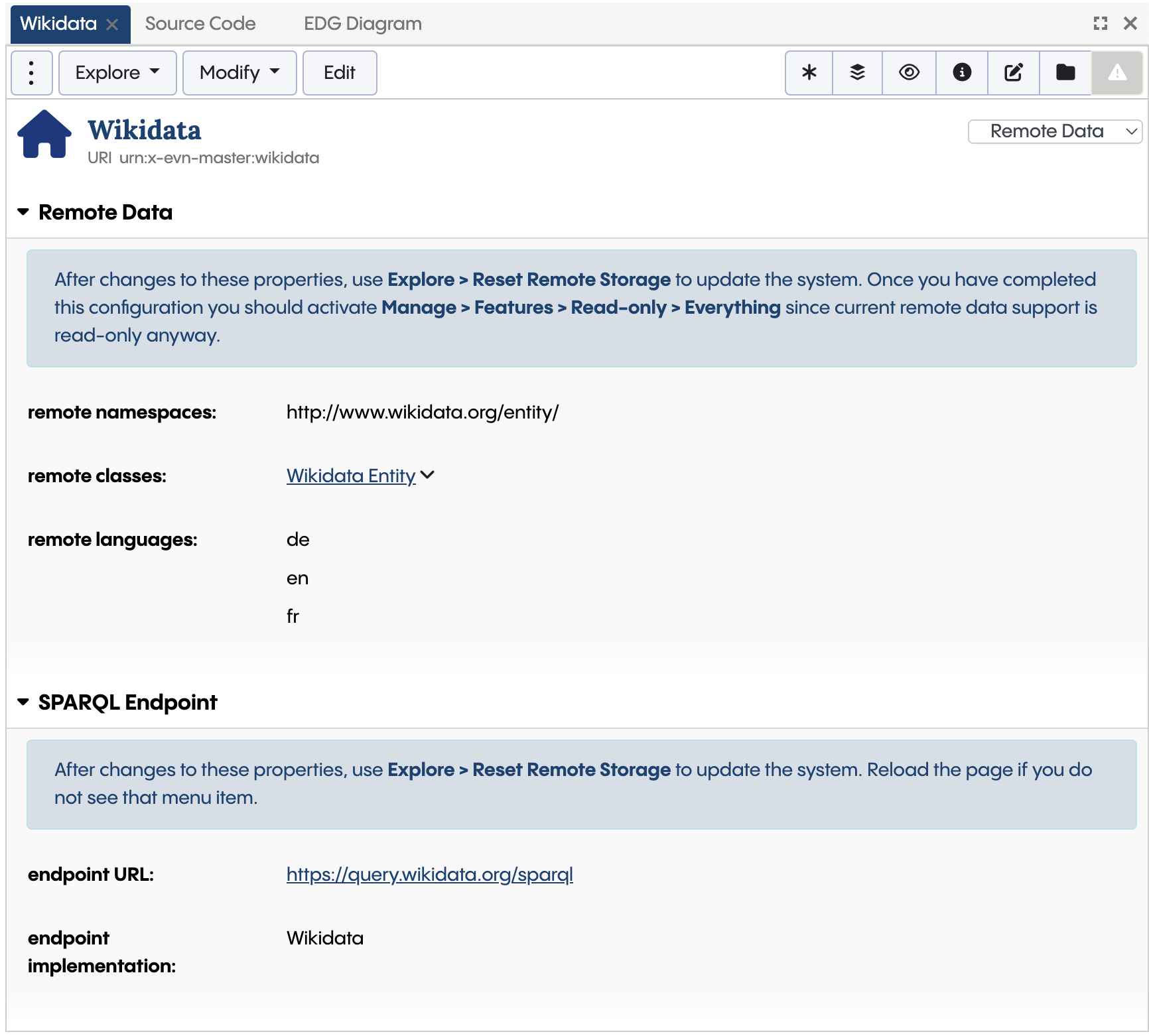

With the newly created asset collection, use the Settings > Includes section to include the graph called TopBraid Remote Data Support. When you back to the “Home” asset of your asset collection, you should then see a new form section *

Use the Remote Data view of the Home asset (here: for the Wikidata Data Graph) to configure access to a remote data source

On that form, start with entering the URL of the SPARQL endpoint. Under endpoint implementation check if your database is listed among the available options. For example, TopBraid has optimizations for GraphDB and Stardog to make use of the faster text search indices of these databases. Also verify if any of the other properties of the SPARQL Endpoint section apply to your database.

Use the property remote is editable if you want users to be able to modify the data on the SPARQL endpoint. By default this is off, meaning that users can only browse.

Then and save the changes and reload the page in the browser. You should then use Explore > Reset Remote Storage… to inform TopBraid that your asset collection shall be treated as a container for remote data. Unless remote is editable is true, this essentially makes the asset collection read-only and disallows any edits except to the Home asset. Instead, TopBraid EDG will manage the updates to this asset collection, for example by loading missing assets when they are needed.

Attention

You need to use Explore > Reset Remote Storage after any change that may affect access to your remote data. This includes changes to the Remote Data section on the Home asset, but also any changes to the Ontologies that describe which classes and instances are present on the remote data source.

With the connection to the SPARQL endpoint just established, the asset collection will be empty by default. You need to use the following properties to instruct TopBraid about which assets and instances of which classes exist on the remote data source:

Remote namespaces must enumerate the namespaces of all assets on the remote data source that you want to use. For example, use

http://www.wikidata.org/entity/for all wikidata entities from that namespace. This information is used by TopBraid whenever a user navigates to a remote asset, e.g. by following a link from a Geography Taxonomy country to its Wikidata sibling. Using these namespaces, TopBraid and the user interface can determine whether it needs to ask the database for missing triples.Remote classes can be used to inform the Search panel and similar features that it needs to query the remote data sources for instances of certain classes. In the case of the Wikidata sample Data Graph, the only remote class is Wikidata Entity, which is the superclass of all other Wikidata classes such as Wikidata Country. Whenever a user searches for Wikidata Countries, the remote endpoint will be queried to fetch matches.

Remote languages can be used to limit which literals will be loaded from the remote endpoint. This is important for cases like Wikidata where each asset has dozens of labels and descriptions, which would quickly overwhelm the user experience and slow down the system if unchecked.

To aid you with these settings, TopBraid includes two menu items in the Modify menu of the Home asset of your remote asset collection:

Add used Remote Classes… will look for any classes that appear in

rdf:typetriples of the SPARQL endpoint and use them to populate the remote classes setting.Add used Remote Namespaces… will look for any namespaces that are used in subjects of the SPARQL endpoint and use them to populate the remote namespaces setting.

In both cases, you may want to post-process the suggested values.

Hint

If you allow editing, make sure that the Default Namespace is one of the remote namespaces, because the create asset dialogs will only allow entering URIs using the remote namespaces.

Again, use Explore > Reset Remote Storage after any change to such values.

Security Concerns for Remote Databases

If a SPARQL endpoint is protected by user name and password, an administrator needs to add the URL of the endpoint

alongside with the user name and password on the Password Management page.

This will store that user name and password in secure storage, preventing normal users from seeing those parameters.

When entering the password, the scope needs to be the short ID of the asset collection for which the URL will be used.

The short ID is the part of the base URI after urn:x-evn-master:.

Hint

As of TopBraid 7.8, support for remote data sources is switched on by default.

However, the system administrator can de-activate it using the disableRemoteData setup parameter.

There is also a setup parameter disableRemoteEditing to generally disable remote editing.

Automated Ontology Discovery for Remote Endpoints

To help you get started with connecting to your SPARQL endpoints, TopBraid includes a convenience feature available under Modify > SHACL Ontology from SPARQL Endpoint… of an Ontology’s Home asset. It will ask you to select an asset collection that is connected to a remote endpoint, and then will issue a number of probe requests to learn about the classes and their properties on the endpoint.

Hint

The automated Ontology creation can be a good starting point when you do not yet have a suitable SHACL-based ontology for your data. You could basically start with an empty Ontology and include that into the remote asset collection, then run the wizard to get started. Note however that this Ontology should only be seen as a starting point and you will likely want to perform manual clean-ups later, esp to rearrange classes into subclass relationships.

You can find and potentially clone this script in the file ontologyscripts.ttl.

Please contact TopQuadrant if you have suggestions on how to improve it.

Controlling when Remote Assets will get loaded

TopBraid’s user interface will recognize remote assets and load missing properties when needed. For example, the Search panel understands that it needs to query the remote database via SPARQL when you search for instances of a class that is known to exist on the remote endpoint.

For cases where the automatic loading of assets is not sufficient, TopBraid provides control over which assets are loaded into the local storage.

There is programmatic control from the API methods loadRemoteResources and isRemoteResource from the tbs namespace.

For individual assets that may have gotten out of data, use the Reload link next to This is a Remote Asset in the header of the form, or the similar Explore > Load Remote Data….

From the Home asset, use Explore > Load All Remote Assets… as a batch process to load all instances of the configured remote classes into TopBraid’s own database.

From the Home asset, use Explore > Load All Linked Remote Assets… to load all assets that are actually referenced by locally defined assets. For example, use this to load all Wikidata Countries that are linked from Geo Taxonomy Countries.

For programmatic access, see tbs:loadRemoteResources as one entry point.

Remote Data Matching

A frequent use case of external data sources such as Wikidata or SNOMED is as a repository of reference data where each reference data item is identified by a distinct key. For example, all Wikidata Countries have an ISO 3611-1 alpha-2 code, such as “au” for Australia. A local asset collection such as the Geography Taxonomy can use the same identifiers, making it possible to define an indirect linkage between local assets and those from remote sources.

TopBraid’s Remote Data Matching feature makes the use of such linkage easy. Let’s look at the Geography Ontology as an example of how to set this up. When you navigate to the class Country which is a subclass of Geo concept and also Concept, follow the link to the wikidata country property shape. This property is used to link from (local) instances of Country to (remote) instances of Wikidata Country.

On the form of this property shape, scroll to the Remote Data Matching section. This section has two properties:

The local match property is the property holding the local values, here: ISO ALPHA-2 country code (

g:isoCountryCode2).The remote match property is the property at the instances of the remote class, here: ISO 3166-1 alpha-2 code (

wdt:P297).

This is typically one of the properties defined at the class that is the sh:class of the surrounding property shape, here: Wikidata Country.

The interpretation of these properties is that each time a (local) Country has a value for the local match property, the system will ask the SPARQL endpoint for matching values of the remote match property. When found, the value of the link property (here: wikidata country) will be updated automatically, making it easier for users and algorithms to navigate into the matching remote data.

Hint

The optional property local value transform may be used in scenarios where there is no exact match between local and remote values.

For example, if the local ISO codes would be in lower-case notation, you can employ a transformation function sparql:ucase to have

the local values upper-cased before they are matched to the remote values.

For programmatic access, there is a multi-function tbs:remoteMatches.

Extending Wikidata

The Wikidata Sample is the recommended starting point if you want to use Wikidata within TopBraid EDG. It consists of:

The Wikidata Data Graph that is initially empty and incrementally populated as a local cache of the remote entities.

The Wikidata Ontology that defines the classes and properties that shall be used from the remote Wikidata server.

The file Wikidata Shapes (

http://datashapes.org/wikidata/) primarily declaring the Wikidata Entity (wikidash:Entity) base class.

You may use the Wikidata Ontology, or your own extension or variation of it, to define additional classes and properties for the domain of your interest.

Note that Wikidata is different from most other RDF data sources in that it does not use the same notion of classes and types that is known

from RDF Schema, OWL and SHACL.

Instead of using rdf:type it uses its own property wdt:P31.

Instead of using rdfs:Class and owl:Class, the Wikidata classes are themselves just ordinary wikidata entities on instance level.

The TopBraid Wikidata support knows about this design pattern, and uses proxy classes from the wikidash: namespace to represent

classes of interest to the user.

The easiest procedure to get started with adding your own Wikidata classes is:

1. In the Wikidata Ontology, create a new subclass of Wikidata Entity.

We recommend using class labels starting with Wikidata.

The Wikidata Ontology uses the wikidash: namespace and you are invited to use the same if you plan to possibly

share your class definitions with TopQuadrant in the future.

In this case, for example, create class Wikidata City with URI http://datashapes.org/wikidata/City.

2. Use Modify > Add property shapes from Wikidata sample… to open a dialog.

3. In this dialog enter the Qxyz number of a suitable sample city, e.g. Q3114 for Canberra and press Load.

4. Once it has loaded, select the most suitable Wikidata class for City, e.g. city.

This will be saved as value for wikidash:targetClass at your new class, telling TopBraid that all instances of city shall be

loaded as (RDF) instances of Wikidata City.

5. Select the properties that you are interested in, e.g. country.

These will become property shapes at the new class.

You can re-run this process later to add other properties.

Once again, note that you should go to Explore > Reset Remote Storage on the Home asset of the Wikidata Data Graph after any change to the Wikidata Ontology.

Hint

Once you are happy with your class definitions, feel free to contact TopQuadrant to have your extension added to the standard Wikidata Sample Ontology. This is also why we suggest using the same namespace for your own samples.

By the way, the Problems and Suggestions feature will try to find missing values for all properties that include wikidash:Entity among their

allowed classes.

Querying Remote Data with GraphQL

TopBraid’s GraphQL engine is aware of graphs that are backed by a remote endpoint. It uses the declared remote classes and remote namespaces to determine if certain resources originate from a remote data source. If a request asks for instances of a remote class, the engine will try to find those instances using a SPARQL query that directly goes against the remote endpoint. For all found matches, it will then proceed caching the base info (labels and types) and, if needed for the rest of the query, also the other properties based on SHACL property shape declarations.

While this means that you do not need to make changes to your queries to work with remote data, it also may cause some queries to become significantly slower, at least for the first time until sufficient data is cached. In particular be mindful that nested queries against remote assets will each be translated into an individual SPARQL query, and those SPARQL queries may accumulate.

Attention

When you query large numbers of remote resources using GraphQL, the matching triples will all be copied into the local copy for TopBraid. The user interface typically limits such queries to 1000 instances at most, to avoid overloading the local database. You may want to do the same in your own queries, or directly use the query services provided by the remote database.

For free-text searches and auto-complete, TopBraid will attempt to rely on text indices provided by the database implementation where available.

If you only want to query the data from the local cache and avoid remote queries, set the optional query parameter skipRemote to true.

Querying Remote Data with SPARQL

The default SPARQL engine of TopBraid will only operate on the triples that are available in its local database. Therefore, triples that are only stored on the remote database will not be “seen”. However, you have various ways to still query this data:

From SPARQL, use the

SERVICEkeyword.From ADS, use the function

dataset.remoteSelectto directly query the endpoint.From ADS (or SWP), use

tbs:loadRemoteResourcesprior to running SPARQL queries to force relevant data into the cache.

Validating Remote Data with SHACL

TopBraid’s SHACL engine currently only operates on the data that is present in its local database. Uncached triples that are stored on the remote database only will not be used for validation.

To validate remote data in its own database, either use the SHACL support for the specific database or make sure that all relevant assets have been loaded into the local database, for example using Explore > Load All Remote Assets.

Updating Remote Data

Unless you have activated remote is editable, asset collections backed by remote data are considered read-only. TopBraid takes control over which triples are loaded from the remote data source into its local cache but usually never modifies the remote data itself.

The suggested workflows for this set-up is that you control the updates to the remote data sources outside of TopBraid.

For example, you may want to periodically reload your copy of SNOMED when a new version has come out.

In such cases, you should invoke the service tbs:resetRemoteStorage to make sure that all local caches are cleared.

You could follow this with calls to tbs:loadRemoteResources to repopulate the local caches if that makes sense,

i.e. if it doesn’t take too long or would overwhelm the local database.

To programmatically perform other updates, use the ADS function dataset.remoteUpdate.

If you have activated remote is editable, GraphQL mutations and ADS scripts will write through to the underlying remote database. TopBraid will first update its internal database and then issue a SPARQL UPDATE to tell the remote endpoint about the added and deleted triples. This means that, if the remote database is unstable, in some cases the two databases may get out of synch. In those cases, you may want to reset the local database so that it reloads a fresh copy from remote.

While almost all typical operations from users will make sure that all edits to the local database will also be written into the

corresponding remote databases, there can be operations that only update the local database.

In particular, updates against the SPARQL endpoint will only affect the local database unless you operate on named graphs

that include the user name, such as urn:x-evn-master:geo:Administrator.

Attention

If the remote database has any kind of inferencing activated, the synchronization between the remote data

and the local copy that TopBraid has may get out of sync.

For example, some databases support rdfs:subPropertyOf inferencing and if the editor asserts a value for a super-property

then the database would automatically also infer the same value for the sub-property.

However, in that case, TopBraid’s local copy would not know about the additional triple, causing inconsistencies.

So: editing a remote database that has inferencing activated is discouraged.